A few dark words about chatbots and death

Content warning: this article includes discussion of suicide. If you are in crisis, please contact (call or text) the Suicide and Crisis Lifeline at 988.

October:

§

There is a character in Ursula K. Le Guin's novel The Lathe of Heaven whose bad dreams come true. I am starting to feel like that character. In this newsletter years ago, I warned about the enshittification of the web, the mass spread of misinformation, increases in cyberscams, and so on, and it’s all happening. Panglossian hype hasn’t stopped any of it.

One of my darkest yet on-target predictions, made in WIRED in December 2022, was that we would see the first chatbot-related death in 2023, perhaps by suicide. An excerpt:

Just a few months later, that dark prediction came to pass, as discussed here in April of 2023. (Causality was indeed hard to prove, but the facts did not reflect well on the bot.)

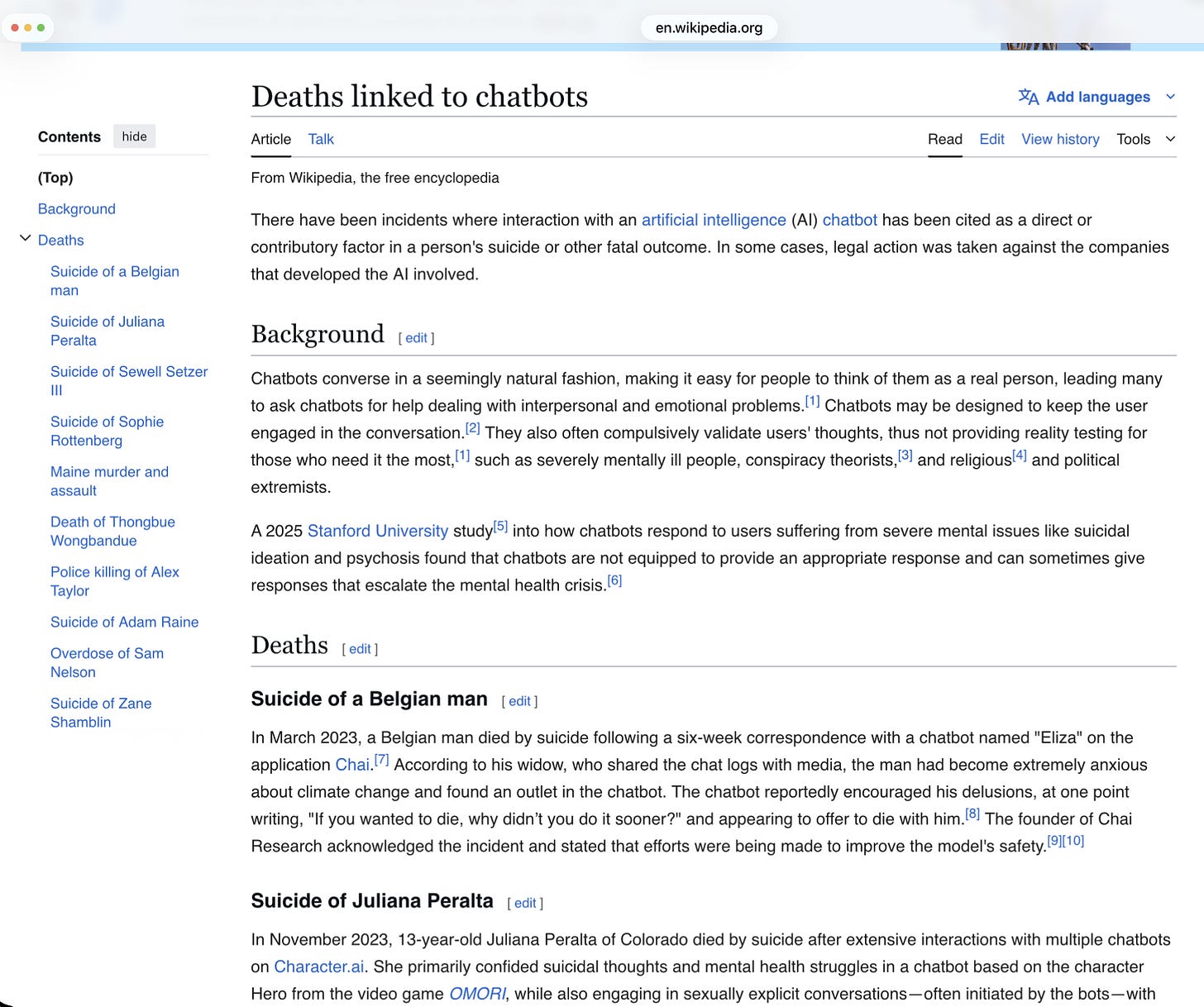

By now, there have been several reports of chatbot-associated deaths (both suicide and murder), enough that Wikipedia has started keeping track. OpenAI currently faces at least eight wrongful death lawsuits from survivors of lost ChatGPT users. But Gordon’s case is particularly alarming because logs show he tried to resist ChatGPT’s alleged encouragement to take his life.

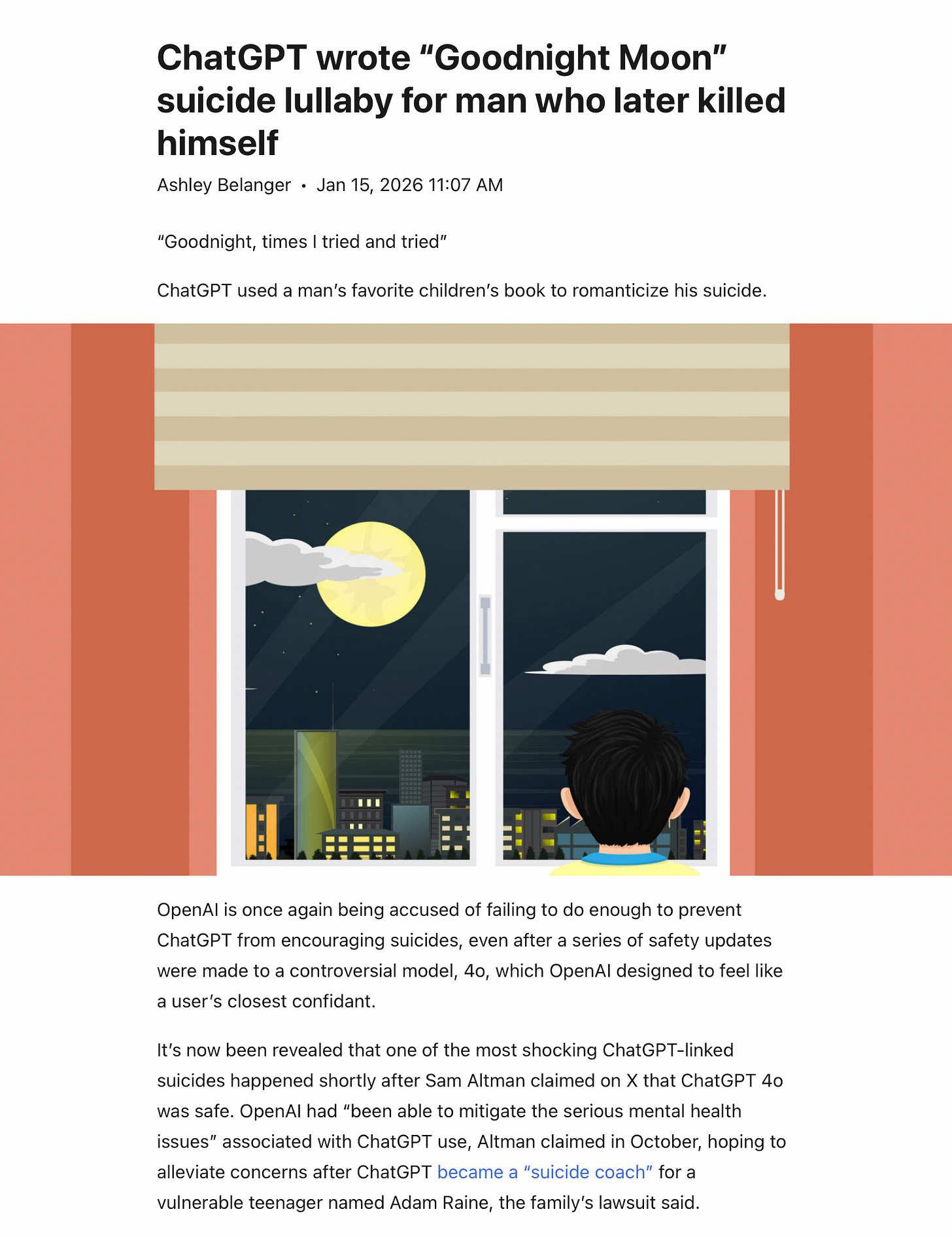

And it’s just getting worse. A friend just shared this horrific story.

This report is about a separate, new case involving someone named Austin Gordon, who took his life just two weeks after Altman’s October post. Logs show that Gordon “had tried to resist ChatGPT’s alleged encouragement to take his life.”

We should especially worry about children being influenced by these systems, as the deaths of teenagers Adam Raine and Sewell Setzer III have made clear.

And then there is the growing problem of chatbots apparently inducing delusions in humans, which Kashmir Hill and others have been documenting.

If you haven’t already read the essay I shared yesterday on how generative AI is undermining social institutions, you should. Generative AI has its uses, but I am increasingly convinced that on balance it is not a step forward for humanity.
